Ruth Kikin-Gil isn't worried about killer robots.

The responsible artificial intelligence (AI) expert is more worried about the other stories she reads about AI. Like the one about journalist Gillian Brockell, who kept seeing ads about newborn babies after her own pregnancy ended in a stillbirth. Or stories of how a Michigan man was wrongfully accused of committing a crime because of faulty facial recognition software. Or even stories of how the technology used in some self-driving cars doesn’t recognize dark-skinned pedestrians.

“You can’t just put these models and features out there and say, ‘It’ll be fine’, because it might not be fine. You need to nurture it, monitor it and make sure it’s doing the things you want it to do,” said Kikin-Gil, a responsible AI strategist and senior user experience (UX) designer at Microsoft.

“The common discourse around AI is that it’s a hard technical problem, and the truth is it’s also a hard human problem because it touches everyone in our society. So we need to ask ourselves, what will help us take a human-centred approach when we’re doing it? What are the things we need to do to get there? Because if we don’t do that, some not so good things happen.”

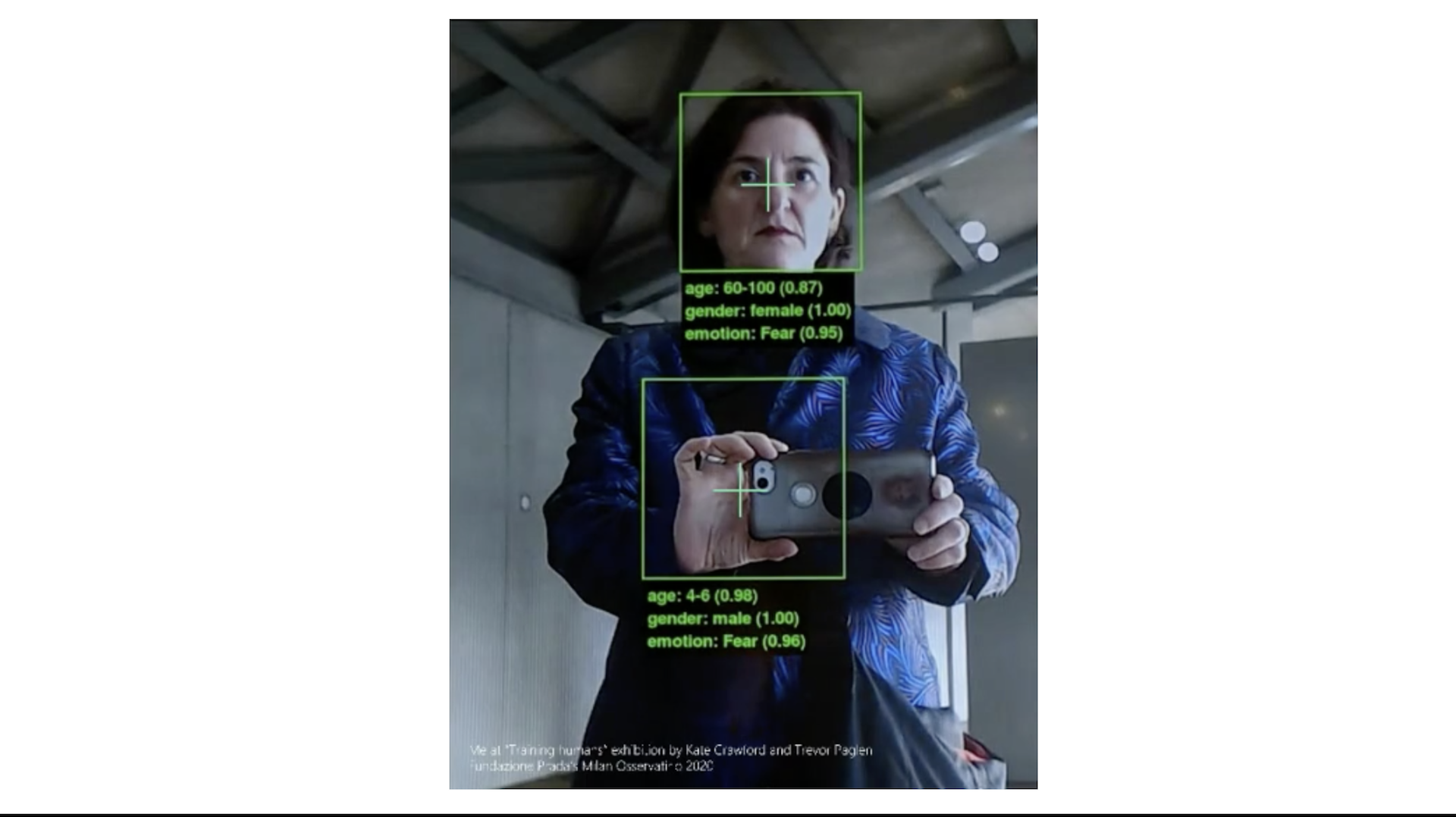

She referenced MIT researcher Joy Buolamwini’s work with the Algorithmic Justice League, in particular the Gender Shades project, which started a movement after it found that some facial recognition software couldn’t recognize the faces of Black women, essentially erasing them. Afterward, a lot of work was done to improve the algorithms so they would recognize people with dark skin. But it’s not that simple, said Kikin-Gil.

“When we are talking about the big bad robots that are going to kill us, this is not what concerns me,” she said. “What concerns me is that we’re going to die by neglect because there’s all these things happening and no one is looking at them and saying ‘let’s stop.’ ”

That’s where responsible AI comes in. Nearly 90 per cent of companies across various countries have encountered ethical issues from using AI, said Kikin-Gil, quoting a 2019 survey done by Capgemini Research Institute. But that same survey showed that when companies use AI ethically, consumers trust them more.

Guidelines for human-AI interaction

As part of her role, Kikin-Gil worked with a group of leaders at Microsoft to develop 18 guidelines for human-AI interaction and a toolkit that other organizations can use. She presented the guidelines at July’s uxWaterloo virtual meetup and talked about how organizations can adopt them in their own work. (uxWaterloo is the local chapter of the Interaction Design Association and a Communitech Peer 2 Peer group. Communitech recently released a statement on ethical AI.)

Responsible AI is not just about data science, said Kikin-Gil. It’s about all disciplines working together to recognize problems and try to solve them. Each discipline brings a different point of view and can spot different things that could go wrong or be fixed. So any team that develops an organization’s responsible AI guidelines should be cross-disciplinary.

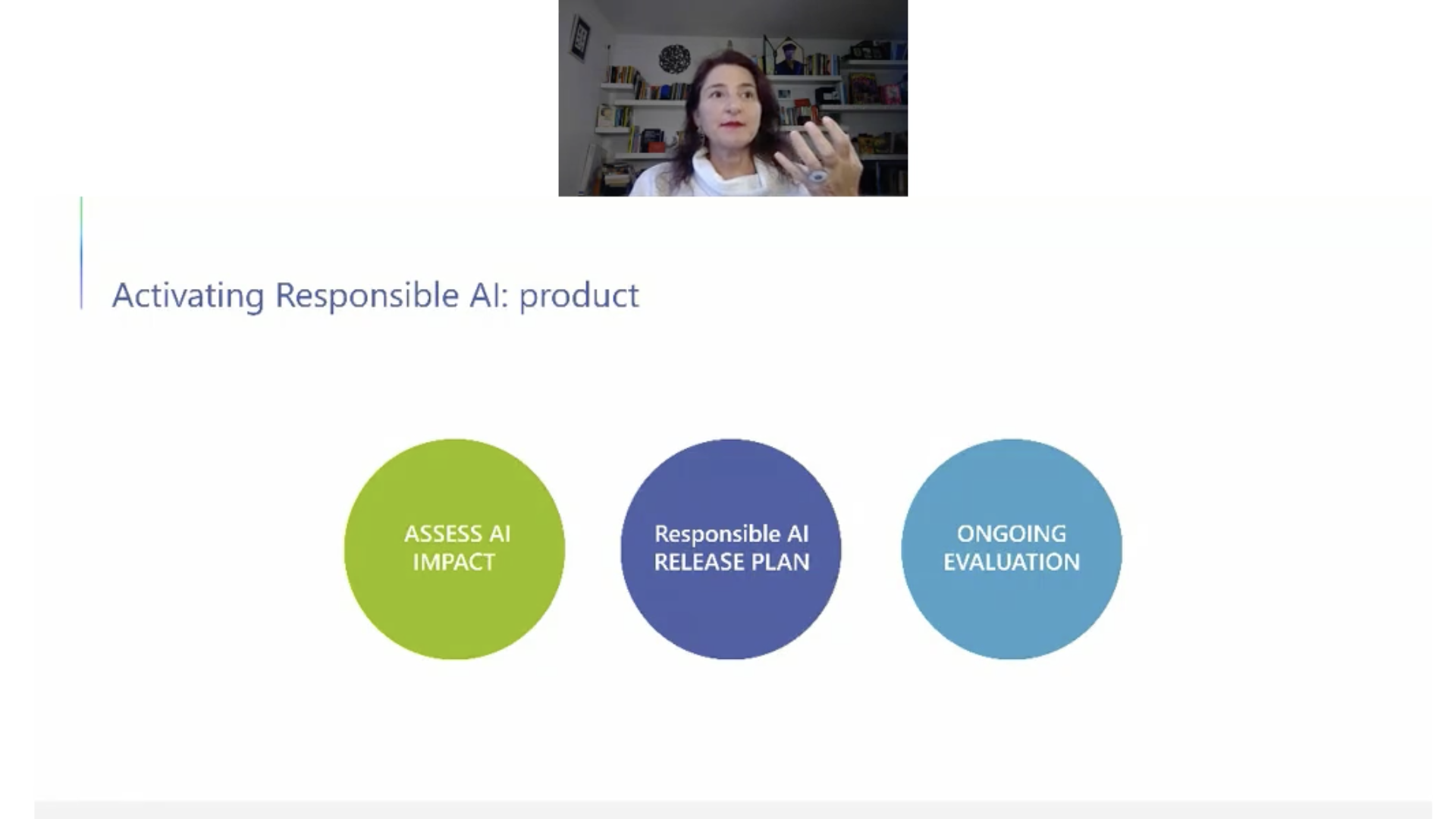

Microsoft thinks about responsible AI throughout the product life cycle, and other organizations should too, said Kikin-Gil. That means assessing the impact of an AI product, creating a responsible AI release plan, and evaluating the product on an ongoing basis.

(Ruth Kikin-Gil appears on video above a slide that says, "Activating responsible AI: product. Assess AI impact. Responsible AI release plan. Ongoing evaluation.")

Microsoft has also built a responsible AI leadership group that doesn’t just know what responsible AI is, but educates the team about it, raises issues the team needs to think about, discusses which methods and mindsets are available to work with, and puts those in place in the product teams.

Think through responsible AI from a design perspective, using human-centred design guidelines. “Good design can mitigate a lot of issues,” said Kikin-Gil.

Users trust companies that follow AI guidelines

After Microsoft created the guidelines, it wanted to learn what users thought when a guideline was applied and when it wasn’t. So the company ran studies to assess the guidelines’ impact on business goals and success. The studies took users through a series of experiments to understand how their perception of a product changed depending on whether a guideline was followed or violated.

“What we found was features that applied the guidelines consistently performed better in metrics connected to successful products. People tended to trust them more, they felt connected and they felt more in control,” said Kikin-Gil.

Microsoft started auditing the user experience of different products against the responsible AI guidelines. Teams first learned about the guidelines and practised applying them, then held a bugbash, where everyone on the team goes through scenarios, tries to break the system, and files the bugs they find. The goals included aligning the user experience with the guidelines, practising looking for responsible AI experience bugs, and promoting cross-discipline collaboration on a product, said Kikin-Gil. More importantly, the company wanted to make sure team members understood what they were doing, what issues they were fixing, and why those issues were important, she added.

“AI is awesome and has so much potential. But it’s also a hard human problem and we need to really pay attention and take really good care of the products we’re putting out in the world and not expect things to resolve by themselves,” said Kikin-Gil.

“If AI is the electricity, then responsible AI is the insulation tape to make sure that all this great power is really landing in the places it needs to and doesn’t electrify anyone.”