Each month we host the Zeitspace Sessions on topics that we think are both interesting and a benefit to our local community. We cover design and tech subject matter, and the October 2017 session was An Intro to Voice UIs and Assistant Apps. We’re releasing the materials that the Zeitspace team of Fabio, Hok, Jesse and Thomas created so you can do the lab on your own.

Before diving into the lab materials, here’s a brief summary of what we talked about in our session.

There have been many UI-driven shifts in computing: cards to prompts, prompts to GUIs, desktop to mobile, and mobile to invisible UIs like voice. Even though Siri was released over 7 years ago, it’s only recently that Voice UIs and Assistant Apps have become mainstream. Assistant Apps enable people to interact with services anytime and anywhere on a growing range of devices: phones, the web, watches, cars, and in-home devices like Alexa, Apple HomePod, and Google Home. South Park recently had an episode that featured Alexa. The episode caused havoc for anyone watching the show with an Alexa within earshot.

South Park causing terror for Google Home and Alexa owners.

The foundation of Voice UIs and Assistant Apps is machine intelligence. Natural Language Processing (NLP) enables applications to analyze and understand human language. Users can speak naturally to a Voice UI-based application rather than just barking known, scripted commands. AI-based systems enable applications to hold conversations with users and to interpret and act on their commands through channels like chat, text or voice.

Throughout the lab materials, we’ll be frequently using the following terms:

- Agent: a collection of intents that, together, form a conversational interface

- Intent: a mapping between what a user says and what action should be taken

- Action: what to do when an Intent is triggered (e.g. run a function)

There are many platforms to develop Assistant Apps: Alexa Skills Kit, Apple SiriKit, and Actions on Google. For this lab, we’ll be building a ToDo Assistant App using Google services.
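To make the Agent, Intent and Action terms concrete, here is a minimal TypeScript sketch of how they relate. It is not Dialogflow's API: the `Intent` and `Action` types, the `handleUtterance` function and the regular expressions are all names and stand-ins we made up for illustration. A real platform matches utterances with NLP trained on example phrases, not with hand-written patterns.

```typescript
// Hypothetical types that mirror the glossary; not Dialogflow's real API.
type Action = (item: string) => string;

interface Intent {
  name: string;
  pattern: RegExp; // stands in for the trained example phrases of a real platform
  action: Action;  // what to do when the Intent is triggered
}

// In-memory list, only for this sketch.
const todos: string[] = [];

const addTodo: Intent = {
  name: "add_todo",
  pattern: /^(?:add|remember) (.+?)(?: to my list)?$/i,
  action: (item) => {
    todos.push(item);
    return `Added "${item}" to your list.`;
  },
};

const listTodos: Intent = {
  name: "list_todos",
  pattern: /^what(?:'s| is) on my list\??$/i,
  action: () =>
    todos.length === 0
      ? "Your list is empty."
      : `You have: ${todos.join(", ")}.`,
};

// The Agent is the collection of Intents.
const agent: Intent[] = [addTodo, listTodos];

// Find the first Intent whose pattern matches and run its Action.
function handleUtterance(utterance: string): string {
  for (const intent of agent) {
    const match = intent.pattern.exec(utterance.trim());
    if (match) {
      return intent.action(match[1] ?? "");
    }
  }
  return "Sorry, I didn't catch that.";
}
```

Calling `handleUtterance("Add buy milk to my list")` triggers the `add_todo` Intent, and anything that matches no pattern falls through to the apology at the end, which plays the role of a fallback Intent.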

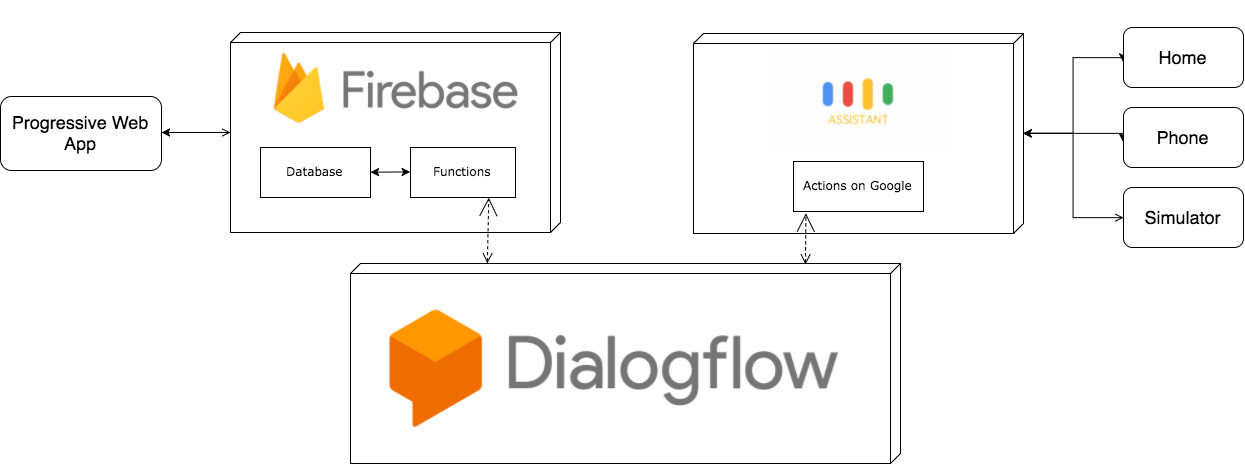

Dialogflow, which until recently was called API.AI, is where we’ll create our Intents and connect them to our Actions. For ease of development, our Actions will be hosted using Firebase Cloud Functions. We’ll also use the Firebase Realtime Database to store our ToDo items.
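The flow described above, where a matched Intent is handed to an Action that reads or writes ToDo items, could look roughly like the sketch below. Treat every name in it as an assumption: the `WebhookRequest` and `WebhookResponse` shapes are simplified stand-ins for the real Dialogflow payload, the intent names `todo.add` and `todo.list` are invented, and `InMemoryTodoStore` only plays the part of the Firebase Realtime Database. Real database calls are asynchronous; the sketch stays synchronous to keep it short, and it is not the code from the lab.

```typescript
// Simplified, hypothetical shapes; the real webhook payload carries more fields.
interface WebhookRequest {
  intent: string;
  parameters: { [name: string]: string };
}

interface WebhookResponse {
  speech: string; // what the assistant should say back
}

// Minimal storage interface; the lab uses the Firebase Realtime Database here.
interface TodoStore {
  add(item: string): void;
  list(): string[];
}

class InMemoryTodoStore implements TodoStore {
  private items: string[] = [];

  add(item: string): void {
    this.items.push(item);
  }

  list(): string[] {
    return this.items.slice(); // return a copy so callers cannot mutate the store
  }
}

type ActionHandler = (req: WebhookRequest, store: TodoStore) => WebhookResponse;

// One Action per Intent name (names are made up for this sketch).
const actionHandlers: { [intent: string]: ActionHandler } = {
  "todo.add": (req, store) => {
    const item = (req.parameters["item"] ?? "").trim();
    if (item === "") {
      return { speech: "What should I add?" };
    }
    store.add(item);
    return { speech: `Okay, I added ${item}.` };
  },
  "todo.list": (_req, store) => {
    const items = store.list();
    return {
      speech:
        items.length === 0
          ? "Your list is empty."
          : `Your list has ${items.join(", ")}.`,
    };
  },
};

// Entry point a cloud function would call with the parsed request body.
function fulfill(req: WebhookRequest, store: TodoStore): WebhookResponse {
  const handler = actionHandlers[req.intent];
  return handler
    ? handler(req, store)
    : { speech: "Sorry, I can't help with that yet." };
}
```

Keeping storage behind a small interface like `TodoStore` is a design choice for the sketch: the Actions can be exercised locally with the in-memory version before a real database is wired in.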

We will use Actions on Google to build a test app for Google Assistant. It provides a simulator that lets us test and debug our Agent against the device experience, and it enables us to deploy the ToDo app on a phone running Google Assistant or on a Google Home device.

In our Zeitspace Session we also showed a Progressive Web App to demonstrate how an Assistant App could feed other applications. For this self-directed lab we won’t be getting into that.

With an understanding of the common terms and the ToDo App architecture, you’re now ready to start the lab. We hope you enjoy building your Assistant App as much as we enjoyed putting the session together. All the steps, as well as code checkpoints in case you get stuck, are available from the GitHub link below.