A Slack notification pops up on your screen: a new pull request (PR) to look at. You click the link and your browser opens GitHub. As you read over the change, you type a few comments. Why did you do this here? Will this need changes to the API? Where are you cleaning up this object?

Within minutes, your colleague has replied and updated the PR. Her changes look good, so you merge them into master and get back to your work. She works in another time zone, the work was done asynchronously and remotely, and there is a record of everything. This is all normal. But what we consider normal in software reviews has changed over time.

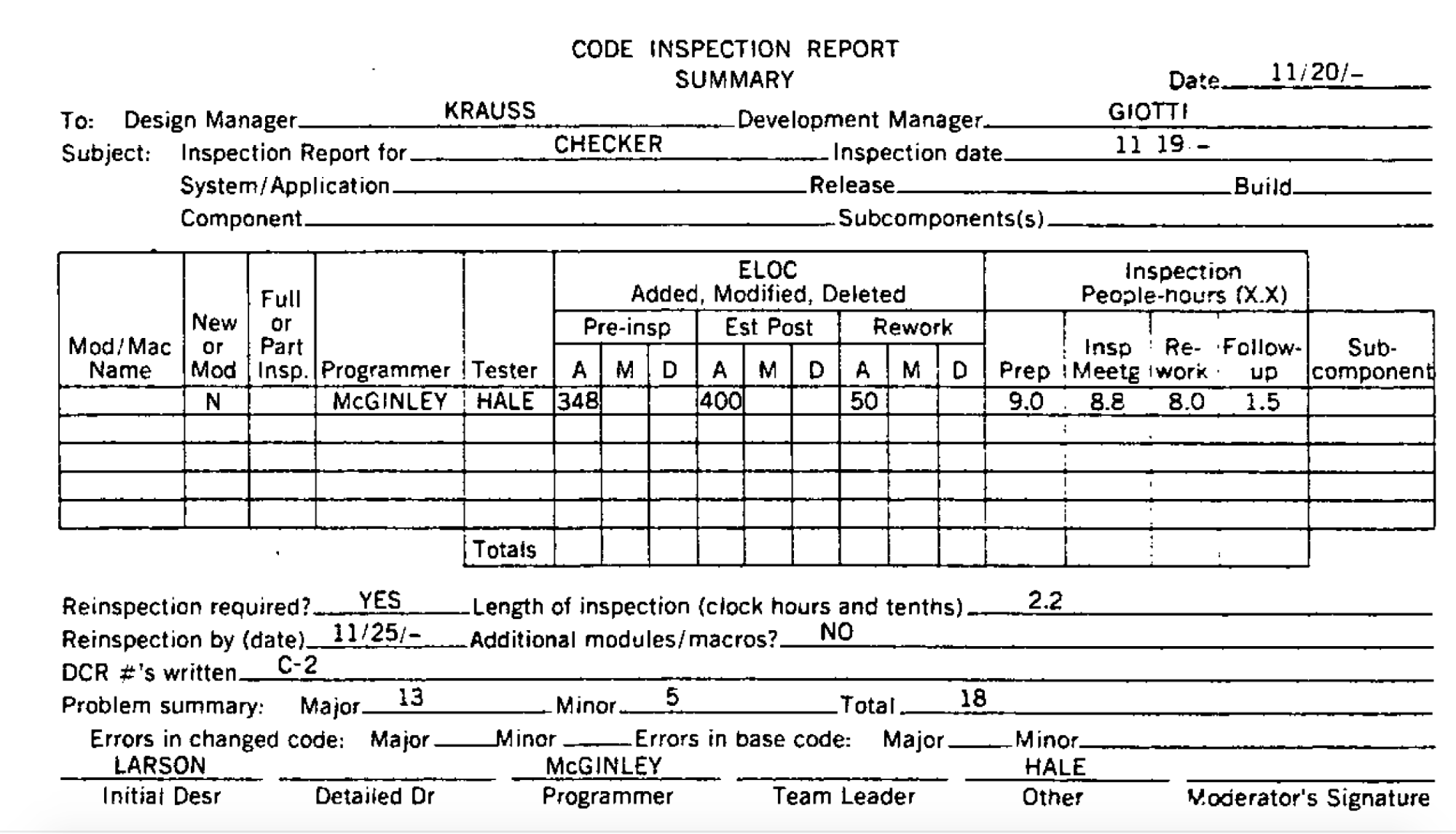

In 1976, Michael Fagan worked at IBM. He wrote a paper describing a process for reviewing code in a structured and formal way. The process involved scheduled, in-person, facilitated walkthroughs of code throughout the software development cycle. There were forms to fill out and rules about who should attend. To be sure, these were not casual events. These sorts of reviews are now referred to as “Fagan inspections” and while they haven’t been widely used in decades, they give us a glimpse into how much software reviews have changed.

(An example of a code inspection report as shown in Fagan’s 1976 paper, “Design and Code Inspections to Reduce Errors in Program Development”.)

If you studied architecture, art, or industrial design in school, you were likely exposed to critique. Critique is one element of a “studio approach”, which brings design students together in a common space. In a studio, you can see each other’s work unfold, establish your own workspace, and receive guidance from a senior studio member or teacher. There are opportunities to talk with other students and to learn to give and receive feedback as part of critique. The goal of critique is to assess the work, not the designer, relative to its objectives.

At Zeitspace, the UX designers meet twice a week to critique our work. We use the approach described in *Discussing Design* by Adam Connor and Aaron Irizarry, and it has worked well for us. With this approach, we discuss the design using this structure:

- What is the objective of the design?

- What elements of the design are related to the objective?

- Are those elements effective in achieving the objective?

- Why or why not?

We ask the designer a lot of questions about their thought process (“Why did you do …?”, “How would this work when…?”). We try not to prescribe solutions — this is critique, not design.

Based on our success with design critique, we asked: could modern software development incorporate critique to improve software quality? If we’ve moved beyond Fagan inspections, have we moved too far, losing the value that their rigour provided?

To learn more about our own software quality model, we interviewed developers across several Zeitspace project teams. We asked them about their process and tools, what they thought worked well, and what might be improved. We also asked about their experience with group problem solving (everything from pair programming to team whiteboard sessions to critique itself). We learned that:

- People value training and discussion around craft and best practices.

- People value planning reviews to mitigate risk of technical debt or architectural flaws.

- The development process varies across individuals, teams, and clients.

- Developers feel empowered to build and use whatever they need.

- How we measure software quality is not consistent across teams.

Interviewees also raised some concerns. Some related to the critique process in general; others were specific to software development, and these were the ones we were most excited to explore further.

- “We need to learn a lot about a project before we can successfully critique it.”

- “Critique may not work for all types of software challenges.”

- “Critique may not be valuable if always done with the same team members.”

- “People won’t critique properly.”

- “If there are too many people or a long introduction, not everyone will be able to contribute during a critique session.”

- “Developer critique may degrade into arguing; people can get heated!”

We’ve already dealt with some of these concerns in our design critique sessions, and we felt we could come up with reasonable approaches to address the others.

| Concern | Approach |
| --- | --- |
| We need to learn a lot about a project before we can successfully critique it. | |
| Critique may not work for all types of software challenges. | |
| Critique may not be valuable if always done with the same team members. | |
| People won’t critique properly. | |
| With too many people or a long introduction, not everyone will be able to contribute during a critique session. | |
| Developer critique may degrade into arguing; people can get heated. | |

With what we’ve learned from reviewing the literature and conducting interviews, we think we can apply critique to software development at Zeitspace. We want to do a few things differently for software critique than we do for design critique, though:

- Evaluate. Software critique is fairly new for us, so we want to invest in tools to measure its impact on the team and the software.

- Train. Several members of our design team have run critique workshops. Introducing the software team to critique in a similar fashion is a good way to get everyone on the same page.

- Facilitate. Facilitating a critique session takes the pressure off participants. An experienced facilitator can more easily (and gently) remind folks of best practices.

- Reflect. By reflecting actively at the end of critique sessions, and holding retrospectives when appropriate, we can be more deliberate about learning what is and isn’t working. We can look for patterns and document what we’ve learned.

Modern teams develop software very differently than they did 40 years ago. Of course, the tools we use have changed, but so have our priorities. We value lean practices. We are more likely to put something out into the world that we know isn’t complete or perfect, confident in our ability to iterate quickly. We have teams that are spread across the world.

Over the next while, Zeitspace is giving software critique a try. Software is big, spanning everything from architectural design to a single line of code, so this is a bit of an adventure. In our first critique, software developers looked at an architecture for supporting multiple languages. Developer Joe Maiolo, who is working on an app for a Zeitspace client, wanted the architecture to be scalable and to make adding languages easy. Everyone asked questions; no one offered solutions. All in all, it was a good first foray into critique for the software developers at Zeitspace. Check in with us in a bit and we’ll be sure to share what we learn.

—

Bull, C., Whittle, J., & Cruickshank, L. (2013). Studios in software engineering education: Towards an evaluable model. In *Proceedings of the 2013 International Conference on Software Engineering* (pp. 1063–1072).

Connor, A., & Irizarry, A. (2015). *Discussing design: Improving communication and collaboration through critique*. Sebastopol, CA: O’Reilly Media.

Fagan, M. E. (1976). Design and code inspections to reduce errors in program development. *IBM Systems Journal, 15*(3), 182–211.